Results

Datasets

This section showcases datasets created within the HYBRIDS project. These datasets are designed to support research on disinformation, abusive language, and public discourse analysis. They are periodically updated and made available to the research community to foster collaboration and advance knowledge in the field.

MetaHate: A Unified Dataset for Hate Speech Detection

MetaHate compiles over 1.2 million social media posts from 36 datasets into a unified resource for hate speech detection research. It is distributed in TSV format with binary labels and supports work in computational linguistics and social media analysis. The dataset is available on Hugging Face: a subset is openly accessible, while access to the full data requires an agreement.
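Because MetaHate is distributed as a TSV file with binary labels, it can be read with Python's standard library alone. The sketch below assumes a header row with hypothetical columns `text` and `label`, where `0` means no hate and `1` means hate. These names and this encoding are assumptions, so check the actual schema on the dataset's Hugging Face page before relying on them.

```python
import csv
import io
from collections import Counter


def load_metahate_tsv(stream):
    """Read a MetaHate-style TSV stream into (text, label) pairs.

    Assumption: hypothetical header columns "text" and "label",
    with label 0 = no hate and 1 = hate.
    """
    reader = csv.DictReader(stream, delimiter="\t")
    rows = []
    for row in reader:
        label = int(row["label"])
        if label not in (0, 1):
            raise ValueError(f"unexpected label: {label!r}")
        rows.append((row["text"], label))
    return rows


# Tiny in-memory sample standing in for the real file.
sample = io.StringIO("text\tlabel\nhave a nice day\t0\nexample hateful post\t1\n")
rows = load_metahate_tsv(sample)
print(Counter(label for _, label in rows))  # label distribution
```

To read a downloaded file, pass `open(path, newline="", encoding="utf-8")` in place of the in-memory sample.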

MultiClaimNet: Massively Multilingual Dataset of Fact-Checked Claim Clusters

MultiClaimNet is a massively multilingual claim clustering resource designed to support scalable automated fact-checking. It comprises three claim cluster datasets constructed from claim-matching pairs, covering claims written in 86 languages. The largest dataset contains 85.3K fact-checked claims grouped into 30.9K clusters.
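The description states that the clusters are built from claim-matching pairs but does not specify the procedure. One simple way to turn pairs into clusters is to treat each matching pair as an edge and take connected components with a union-find structure. The sketch below shows that approach. It is an assumption for illustration, not necessarily MultiClaimNet's method, which may, for example, guard against long chains of loosely related claims being merged. The claim IDs are hypothetical.

```python
from collections import defaultdict


def cluster_claims(pairs):
    """Group claims into clusters, assuming every matching pair puts both
    claims in the same cluster (clusters = connected components)."""
    parent = {}

    def find(x):
        parent.setdefault(x, x)
        # Path halving: point nodes closer to the root while walking up.
        while parent[x] != x:
            parent[x] = parent[parent[x]]
            x = parent[x]
        return x

    def union(a, b):
        ra, rb = find(a), find(b)
        if ra != rb:
            parent[rb] = ra

    for a, b in pairs:
        union(a, b)

    clusters = defaultdict(set)
    for claim in parent:
        clusters[find(claim)].add(claim)
    # Sort for a deterministic output order.
    return sorted((sorted(c) for c in clusters.values()), key=lambda c: c[0])


pairs = [("c1", "c2"), ("c2", "c3"), ("c4", "c5")]
print(cluster_claims(pairs))  # [['c1', 'c2', 'c3'], ['c4', 'c5']]
```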

MultiCaption: Dataset for detecting disinformation using multilingual visual claims

MultiCaption is a multilingual dataset designed to support misinformation detection by identifying contradicting captions associated with the same visual content. The dataset contains 11,088 visual claims across 64 languages.

Migration Sentiment Analysis Dataset from Portuguese Political Manifestos (2011, 2015, 2019)

This dataset contains a collection of migration-related sentences extracted from Portuguese political party manifestos from the 2011, 2015, and 2019 legislative elections. Each entry includes the original sentence in Portuguese, sentiment analysis scores (positive, negative, and neutral probabilities), and the specific migration-related term that appears in the text.
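Each entry pairs a sentence and a migration-related term with three sentiment probabilities. The sketch below models an entry and counts sentiment labels per term. The field names, the example terms and scores, and the rule that an entry's label is the class with the highest probability are all illustrative assumptions, not the dataset's documented schema.

```python
from collections import Counter, defaultdict
from dataclasses import dataclass


@dataclass
class MigrationSentence:
    # Hypothetical field names; the released files may use different ones.
    sentence: str    # original Portuguese sentence
    term: str        # migration-related term appearing in the sentence
    positive: float  # sentiment probabilities
    negative: float
    neutral: float

    def label(self) -> str:
        # Assumption: the label is the class with the highest probability.
        scores = {"positive": self.positive, "negative": self.negative, "neutral": self.neutral}
        return max(scores, key=scores.get)


def label_counts_by_term(rows):
    """Count predicted sentiment labels for each migration-related term."""
    counts = defaultdict(Counter)
    for row in rows:
        counts[row.term][row.label()] += 1
    return counts


# Illustrative entries with made-up scores.
rows = [
    MigrationSentence("example sentence 1", "imigrantes", 0.70, 0.10, 0.20),
    MigrationSentence("example sentence 2", "imigrantes", 0.05, 0.80, 0.15),
    MigrationSentence("example sentence 3", "refugiados", 0.20, 0.10, 0.70),
]
counts = label_counts_by_term(rows)
for term, c in sorted(counts.items()):
    print(term, dict(c))
```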


Software and Models

Here, you will find software tools and computational models developed as part of the HYBRIDS project. These resources include AI-driven models for disinformation detection, NLP applications, and hybrid intelligence systems. The section will be continuously updated to provide access to the latest innovations and contributions from the project.

MetaHateBERT

MetaHateBERT is a fine-tuned BERT model specifically designed to detect hate speech in text. It is based on the bert-base-uncased architecture and has been trained for binary text classification, distinguishing between ‘no hate’ and ‘hate’.

CT-BERT-PRCT

A specialized BERT model fine-tuned to detect Population Replacement Conspiracy Theory (PRCT) content on social media platforms. The model performs well at identifying both explicit and implicit PRCT narratives and generalizes reasonably well across platforms and languages.

Llama-3-8B-Distil-MetaHate

Llama-3-8B-Distil-MetaHate is a distilled version of the Llama 3 architecture, fine-tuned for hate speech detection and explanation. Developed by the Information Retrieval Lab at the University of A Coruña, the model uses Chain-of-Thought reasoning to make its hate speech classifications more interpretable: it aims not only to detect hate speech but also to explain its classifications.

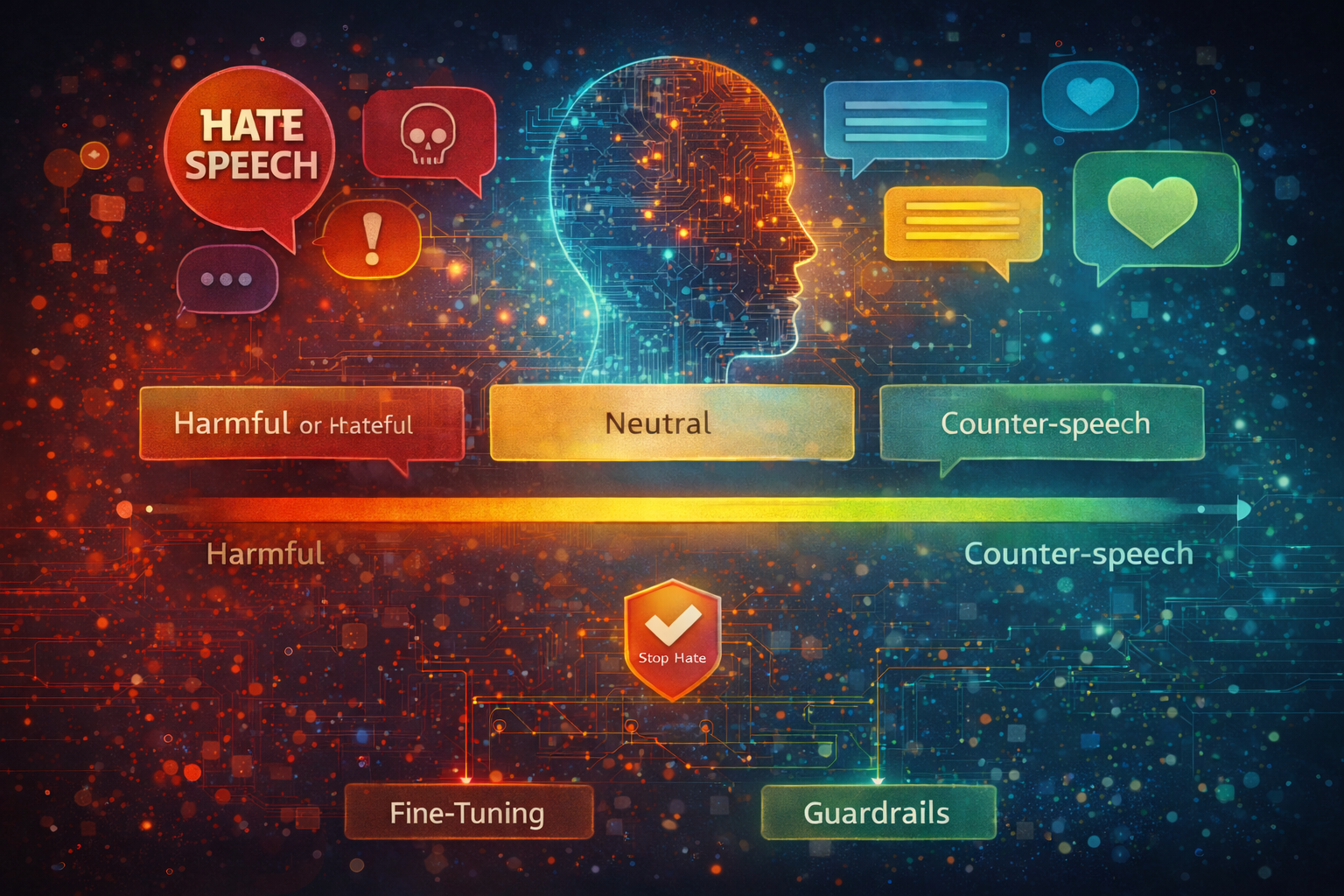

Decoding Hate: Exploring Language Models’ Reactions to Hate Speech

This study analyses how large language models respond to hateful inputs in open text generation. Comparing seven state-of-the-art models, the authors assess whether they produce harmful content, neutral responses, or counter-speech.

WATCHED: A Web AI Agent Tool for Combating Hate Speech by Expanding Data

Online harms, especially hate speech, threaten user safety and trust on digital platforms. WATCHED is an AI chatbot designed to help content moderators detect and address hate speech.

Can LLMs Evaluate What They Cannot Annotate? Revisiting LLM Reliability in Hate Speech Detection

This repository provides the implementation and evaluation framework for assessing the reliability of Large Language Models (LLMs) as annotators and evaluators in hate speech detection.

Personalisation or Prejudice? Addressing Geographic Bias in Hate Speech Detection using Debias Tuning in Large Language Models

This model is designed for hate speech detection and incorporates geographic and language contextualisation analysis, as described in the paper.

DeepSeek-PRCT

DeepSeek-PRCT is a LoRA fine-tuned DeepSeek-R1-14B model designed to detect Population Replacement Conspiracy Theory (PRCT) content. Although it was trained on informal Portuguese Telegram messages, the model is optimized for formal news discourse and shows strong cross-domain transfer to Italian news headlines.

Mistral-PRCT

Mistral-PRCT is a LoRA fine-tuned Mistral-7B model adapted for detecting Population Replacement Conspiracy Theory (PRCT) content. Trained exclusively on informal social media discourse from Portuguese Telegram messages, the model shows strong cross-domain generalization and competitive performance across languages.

Sentiment Analysis of Migration in Portuguese Political Manifestos

A collection of scripts developed to analyze the sentiment of migration-related terms in Portuguese political manifestos from 2011, 2015, and 2019. The repository includes computational tools for data integration, text processing, and sentiment classification using multilingual BERT and

CodeFlow: Automating the Flow of Code with LLMs

CodeFlow is a research tool tailored for Digital Humanities and Social Science research that automates code generation and refinement through iterative dialogue with large language models. By handling technical implementation details automatically via an iterative debug-fix loop, CodeFlow enables researchers to focus on their core questions while maintaining computational rigor.
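An iterative debug-fix loop of the kind described above can be sketched as follows. This is a minimal illustration, not CodeFlow's implementation. The `model` callable is a hypothetical stand-in for a real LLM client, and the prompt wording, the `max_iters` limit, and the rule of accepting the first candidate that runs without raising an exception are all assumptions.

```python
import traceback
from typing import Callable, Optional

# Hypothetical model interface: takes a prompt, returns Python source code.
Model = Callable[[str], str]


def debug_fix_loop(task: str, model: Model, max_iters: int = 3) -> Optional[str]:
    """Ask the model for code, run it, and feed any error back until it runs."""
    prompt = f"Write Python code for this task:\n{task}"
    for _ in range(max_iters):
        code = model(prompt)
        try:
            # Run the candidate in a fresh namespace (no sandboxing: sketch only).
            exec(code, {})
            return code  # ran without raising, so accept it
        except Exception:
            error = traceback.format_exc()
            # Build the next prompt from the failing code and its traceback.
            prompt = (
                f"The following code failed.\n\nCode:\n{code}\n\n"
                f"Error:\n{error}\nPlease return a corrected version."
            )
    return None  # give up after max_iters attempts


# Stub model for demonstration: the first answer fails, the second runs.
answers = iter(["result = 1 / 0", "result = sum(range(10))"])
fixed = debug_fix_loop("compute a sum", lambda prompt: next(answers))
print(fixed)
```

Passing the traceback back in the prompt gives the model concrete evidence of what went wrong, which is the core idea of a debug-fix loop. A real tool would also need sandboxed execution and a check that the code actually answers the research question, not merely that it runs.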

Publications in Journals

This section features peer-reviewed journal articles produced within the HYBRIDS project. These publications contribute to advancing research on disinformation detection, public discourse analysis, and hybrid intelligence applications. Each article undergoes rigorous academic review, ensuring high-quality scientific contributions. The section will be continuously updated to reflect the latest findings and insights from the HYBRIDS consortium.

M. J. Maggini, D. Bassi, P. Piot, G. Dias, P. G. Otero, “A systematic review of automated hyperpartisan news detection,” PLOS ONE, vol. 20, 2024, https://doi.org/10.1371/journal.pone.0316989.

L. Nannini, E. Bonel, D. Bassi, M. J. Maggini, “Beyond phase-in: assessing impacts on disinformation of the EU Digital Services Act,” AI and Ethics, 2024, https://doi.org/10.1007/s43681-024-00467-w.

R. Panchendrarajan, A. Zubiaga, “Claim detection for automated fact-checking: A survey on monolingual, multilingual and cross-lingual research,” Natural Language Processing Journal, 2024, https://doi.org/10.1016/j.nlp.2024.100066, repository: https://doi.org/10.48550/arxiv.2401.11969.

D. Bassi, S. Fomsgaard, M. Pereira-Fariña, “Decoding persuasion: a survey on ML and NLP methods for the study of online persuasion,” Frontiers in Communication, vol. 9, 2024, https://doi.org/10.3389/fcomm.2024.1457433.

R. Panchendrarajan, A. Zubiaga, “Expert Systems with Applications,” Expert Systems with Applications, 2024, https://doi.org/10.1016/j.eswa.2024.124097, repository: https://doi.org/10.48550/arxiv.2401.11972.

E. B. Marino, J. M. Benitez-Baleato, A. S. Ribeiro, “The Polarization Loop: How Emotions Drive Propagation of Disinformation in Online Media—The Case of Conspiracy Theories and Extreme Right Movements in Southern Europe,” Social Sciences, vol. 13, 2024, https://doi.org/10.3390/socsci13110603.

Publications in conferences

Here, you will find conference papers delivered by HYBRIDS researchers at leading international scientific events. These contributions showcase ongoing research developments, methodologies, and results related to disinformation, AI-driven discourse analysis, and hybrid intelligence. By engaging with the broader academic community, these publications foster knowledge exchange and collaboration. The section will be regularly updated with new conference proceedings and presentations.

R. Panchendrarajan, R. Frade, A. Zubiaga, “ClaimCatchers at SemEval-2025 Task 7: Sentence Transformers for Claim Retrieval,” 19th International Workshop on Semantic Evaluation (SemEval-2025), Association for Computational Linguistics, July 2025, DOI: 10.5281/zenodo.16792499, https://aclanthology.org/2025.semeval-1.63/, repository: https://zenodo.org/records/16792499.

R. Panchendrarajan, R. Míguez Pérez, A. Zubiaga, “MultiClaimNet: A Massively Multilingual Dataset of Fact-Checked Claim Clusters,” Findings of the Association for Computational Linguistics: EMNLP 2025, Association for Computational Linguistics, November 2025, DOI: 10.18653/v1/2025.findings-emnlp.599, https://aclanthology.org/2025.findings-emnlp.599/, repository: https://zenodo.org/records/17854554.

D. Bassi, M. J. Maggini, R. Vieira, M. Pereira-Fariña, “A Pipeline for the Analysis of User Interactions in YouTube Comments: A Hybridization of LLMs and Rule-Based Methods,” IEEE Xplore, 2024, https://ieeexplore.ieee.org/document/10883781, repository: https://zenodo.org/records/14917710.

E. B. Marino, R. Vieira, J. M. Benitez Baleato, A. S. Ribeiro, K. Laken, “Decoding Sentiments about Migration in Portuguese Political Manifestos (2011, 2015, 2019),” Proceedings of the 16th International Conference on Computational Processing of Portuguese – Vol. 2, pp. 149–159, 2024, https://doi.org/10.18653/v1/2024.propor-2.20.

M. J. Maggini, D. Bassi, V. Morini, G. Rossetti, “Diachronic Political Content Analysis: A Comparative Study of Topics and Sentiments in Echo Chambers and Beyond,” Zenodo, 2024, https://doi.org/10.5281/zenodo.14755298.

M. Pastor, N. Oostdijk, P. Martín-Rodilla, J. Parapar, “Enhancing Discourse Parsing for Local Structures from Social Media with LLM-Generated Data,” Proceedings of the 31st International Conference on Computational Linguistics, 2025, https://aclanthology.org/2025.coling-main.584/.

Y. Li, R. Panchendrarajan, A. Zubiaga, “FactFinders at CheckThat! 2024: Refining Check-worthy Statement Detection with LLMs through Data Pruning,” CLEF 2024: Conference and Labs of the Evaluation Forum, 2024, https://doi.org/10.5281/zenodo.13355043.

K. Laken, “Fralak at SemEval-2024 Task 4: Combining RNN-generated hierarchy paths with simple neural nets for hierarchical multilabel text classification in a multilingual zero-shot setting,” Proceedings of the 18th International Workshop on Semantic Evaluation (SemEval-2024), 2024, https://doi.org/10.18653/v1/2024.semeval-1.89.

M. Pastor, E. B. Marino, N. Oostdijk, “La reconnaissance automatique des relations de cohérence RST en français” [Automatic recognition of RST coherence relations in French], Actes de la 31ème Conférence sur le Traitement Automatique des Langues Naturelles, 2024, https://aclanthology.org/2024.jeptalnrecital-taln.34/.

M. J. Maggini, E. B. Marino, P. Gamallo, “Leveraging Advanced Prompting Strategies in Llama-8b for Enhanced Hyperpartisan News Detection,” Zenodo, 2024, https://doi.org/10.5281/zenodo.14755147.

P. Piot, P. Martín-Rodilla, J. Parapar, “MetaHate: A Dataset for Unifying Efforts on Hate Speech Detection,” Proceedings of the International AAAI Conference on Web and Social Media, 2024, https://doi.org/10.1609/icwsm.v18i1.31445.

E. Bonel, L. Nannini, D. Bassi, M. J. Maggini, “Position: Machine learning-powered assessments of the EU Digital Services Act aid quantify policy impacts on online harms,” Zenodo, 2024, https://doi.org/10.5281/zenodo.14755218, repository: https://zenodo.org/records/14755218.

R. Bandyopadhyay, D. Assenmacher, J. M. Alonso-Moral, C. Wagner, “Sexism Detection on a Data Diet,” Companion Proceedings of the 16th ACM Web Science Conference, 2024, https://doi.org/10.1145/3630744.3663609.

M. Pastor, N. Oostdijk, “Signals as Features: Predicting Error/Success in Rhetorical Structure Parsing,” Proceedings of the 5th Workshop on Computational Approaches to Discourse (CODI 2024), 2024, https://aclanthology.org/2024.codi-1.13/.

M. Pastor, N. Oostdijk, M. Larson, “The Contribution of Coherence Relations to Understanding Paratactic Forms of Communication in Social Media Comment Sections,” Proceedings of JADT 2024: 17th International Conference on Statistical Analysis of Textual Data, 2024, https://hal.science/hal-04536610.